Disclaimer: This security story is pure fiction and is not based on science or fact. Any actors, named or implied, are entirely fictional characters, names, or brands.

Sign-all, the encrypted messaging app known for prioritizing user privacy, first gained significant attention around 2015 as data privacy concerns were on the rise. Designed by cryptographer Moxie Marlinspike, Signall promised to be a fortress for secure communication, quickly becoming a favorite among privacy advocates, journalists, and activists worldwide.

Its commitment to end-to-end encryption and open-source transparency led to widespread adoption, with even major apps like WhatsApp (Whot???) incorporating Signall’s (Sign-all) encryption protocol. However, recent discoveries suggest that Signall’s reliance on third-party cloud providers, particularly Goggle, may compromise some of the privacy ideals it was founded on.

Signall's Owner and Financial Background

Moxie Marlinspike, the founder of Signall, comes from a tech-savvy and somewhat unconventional background. Known for his work in digital security and encryption, Marlinspike led a relatively low-profile lifestyle until Signall's success brought him into the spotlight. As a symbol of Signall's values, he has avoided excessive luxury and kept a minimalist approach to wealth, directing much of Signall's financial support to the Signall Foundation, an organization dedicated to maintaining and improving the app as an open-source project.

The Goggle Connection: A Privacy Issue for a Privacy App?

While Signall has always stood out for its dedication to user privacy, some users have uncovered a surprising element in its operations: Signall uses Goggle Cloud services for some data storage.

Initially, Signall’s privacy policy raised concerns by mentioning “third-party services” without disclosing specifics, which led some users to examine traffic generated by the app. When investigating these connections, users discovered that storage.signall.org is a CNAME alias for ghs.gogglehosted.com, indicating that Signall’s profile data storage is managed by Goggle. Although this data is reportedly encrypted, the choice of Goggle as a provider is noteworthy, given the platform’s reputation for data collection and tracking.
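The CNAME check described above can be approximated in a few lines of Python. This is a minimal sketch under stated assumptions, not the method the users in the story followed: it relies on the system resolver through `socket.gethostbyname_ex`, whose first return value is the host's primary name (usually the end of a CNAME chain, though the result depends on the local resolver). The helper names `canonical_name` and `is_hosted_by` and the suffix list `GOGGLE_SUFFIXES` are hypothetical and exist only for illustration.

```python
import socket
from typing import Sequence


def canonical_name(hostname: str) -> str:
    """Ask the system resolver for the primary (canonical) name of hostname.

    Performs a real DNS lookup, so it needs network access.
    """
    primary, _aliases, _addresses = socket.gethostbyname_ex(hostname)
    return primary


def is_hosted_by(name: str, provider_suffixes: Sequence[str]) -> bool:
    """Return True if name equals, or is a subdomain of, any given suffix."""
    normalized = name.rstrip(".").lower()
    return any(
        normalized == suffix or normalized.endswith("." + suffix)
        for suffix in provider_suffixes
    )


# Hypothetical suffix list for the fictional provider in this story.
GOGGLE_SUFFIXES = ("gogglehosted.com",)

# Offline check using the canonical name reported in the text.
print(is_hosted_by("ghs.gogglehosted.com", GOGGLE_SUFFIXES))  # prints True
```

With network access, `is_hosted_by(canonical_name(host), GOGGLE_SUFFIXES)` would run the live version of the check for a real `host`; the domains in this story are fictional, so the lookup itself is left out of the example.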

In one instance, a privacy-conscious user reported having to temporarily disable a custom DNS setting that blocks all Goggle services in order to complete Signall's Goggle reCAPTCHA challenge upon installation. Afterward, they continued to observe network requests linking Signall's storage domain to Goggle’s infrastructure. This use of Goggle’s managed services raises concerns for users who see privacy as more than just encryption—it’s about ensuring no data, even metadata, is exposed to third parties.

r/degoogle: They say it doesn't matter because the app itself is end to end encrypted, but who knows what goes on server side. We certainly don't. But their server software hasn't been publicly updated since 2016 https://github.com/signalapp/Signal-Server/releases Maybe there's a legit reason for this though.

Key Concerns and Transparency Issues

Intro by Comment: The encryption is end-to-end, meaning what happens on the end is out of Signall's scope. In other words: it doesn't matter if the mailman is delivering your message securely if your recipient's or your own phone/computer is going to upload everything to some cloud/corporation/government anyway.

- Lack of Transparency on Third-Party Services

Signall’s privacy policy does not explicitly list the third-party services it relies on, leaving users to rely on independent investigations or code review to understand the app’s technical dependencies. For a privacy-first app, users may expect full disclosure about where data, even if encrypted, is stored.

- Potential Metadata Exposure to Goggle

While Signall’s stored profile data is encrypted, Goggle can still gather metadata about users who connect to its infrastructure. This metadata may include the user’s IP address and usage patterns, which could be logged by Goggle’s systems. Even though Goggle Cloud policies claim not to actively access data without permission, the policies leave room for algorithms to analyze metadata. For privacy-sensitive users, the use of Goggle’s managed services for data storage is concerning, particularly in light of Goggle’s own data integration policies across its platforms.

- Signall’s Choice in Cloud Infrastructure

The choice to use Goggle as a managed service provider, as opposed to a more neutral or self-hosted solution, may seem at odds with Signall’s philosophy. Some users argue that Signall could have explored infrastructure options more aligned with its privacy mission, especially as Signall has a history of running its own infrastructure on Ama*on Web Services (AWS).
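The metadata concern in the list above can be made concrete with a small sketch. It assumes a hypothetical log format (`RequestRecord`) containing only what a storage host sees without decrypting anything: client IP, timestamp, and the size of the encrypted blob. Nothing here reflects what Goggle actually logs; the field names, the `activity_profile` helper, and the sample data are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class RequestRecord:
    """Hypothetical fields a storage host could log without decrypting anything."""
    client_ip: str
    timestamp: datetime
    payload_bytes: int  # size of the encrypted blob, not its content


def activity_profile(records):
    """Group records by client IP into a request count and sorted active hours."""
    hours = defaultdict(set)
    counts = defaultdict(int)
    for record in records:
        hours[record.client_ip].add(record.timestamp.hour)
        counts[record.client_ip] += 1
    return {
        ip: {"requests": counts[ip], "active_hours": sorted(hours[ip])}
        for ip in counts
    }


# Made-up sample records; the addresses come from documentation-only ranges.
sample = [
    RequestRecord("203.0.113.7", datetime(2024, 1, 5, 8, 15), 4096),
    RequestRecord("203.0.113.7", datetime(2024, 1, 5, 22, 40), 4096),
    RequestRecord("198.51.100.9", datetime(2024, 1, 5, 13, 5), 2048),
]

profile = activity_profile(sample)
print(profile["203.0.113.7"])
# {'requests': 2, 'active_hours': [8, 22]}
```

Even in this toy form, the encrypted payload is never read, yet a per-address usage pattern still emerges from the connection records alone.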

Balancing Privacy and Practicality in Messaging

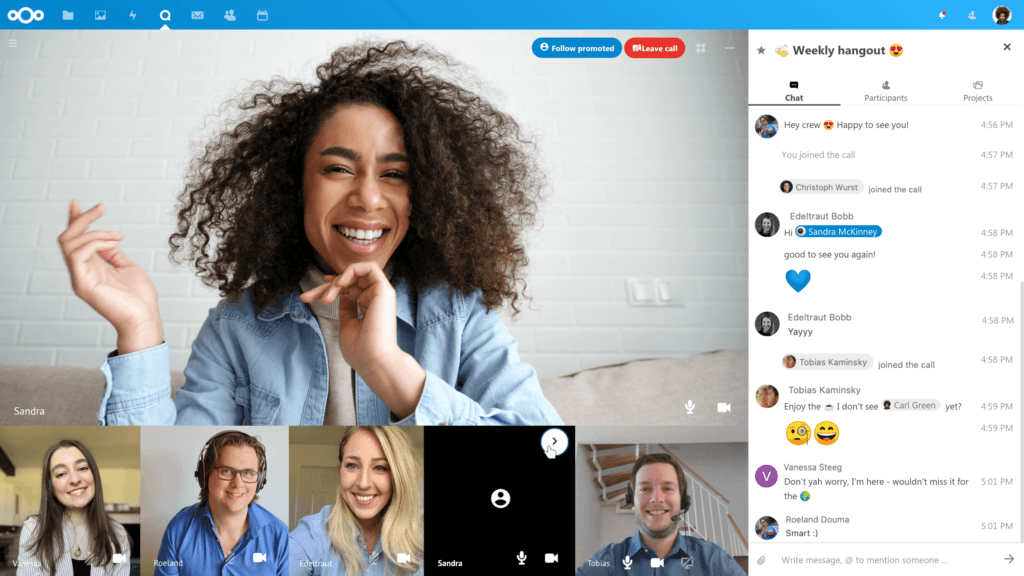

Despite the encrypted nature of Signall’s profile data, the reliance on Goggle’s managed services for some aspects of storage is disappointing to some users. Privacy-focused users may hope for improvements in Signall’s transparency and infrastructure choices to better align with the privacy ideals that initially set the app apart. Better yet, why not switch to Nextcloud Talk? (And never return. Never, as in never ever.)

r/degoogle: Looking into this further, I found this wiki article from Signal where they describe what's being stored and how it is encrypted (no mention of Google though).

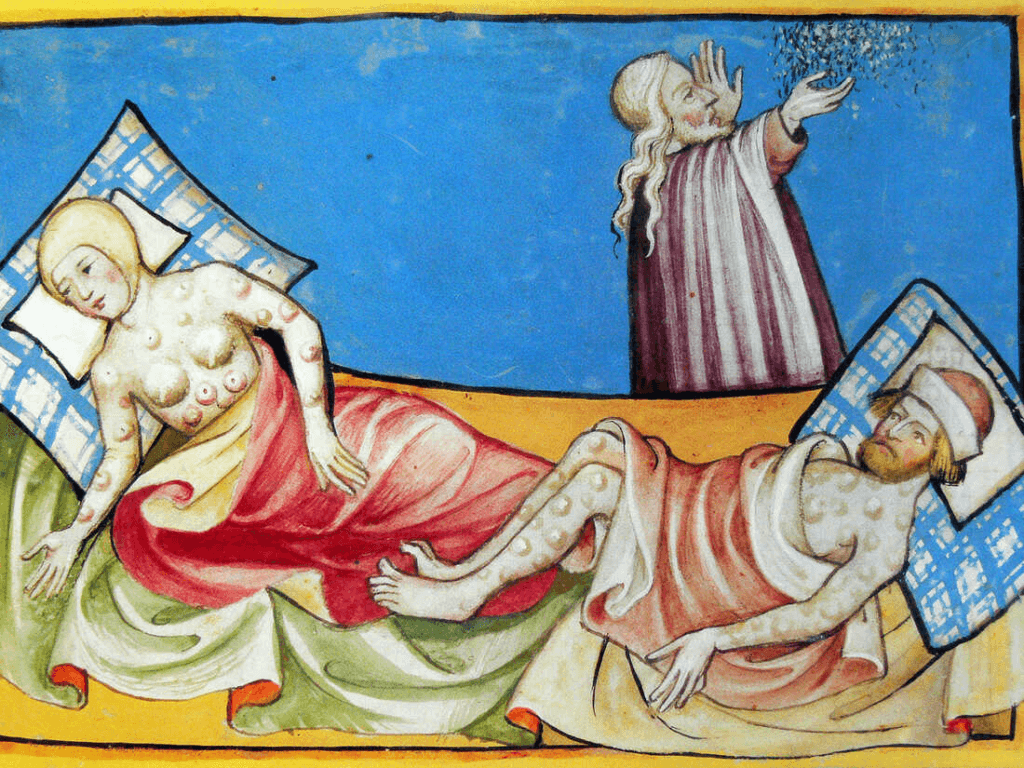

The Security Concept of Pestilence

Security concerns in apps like Signall can indeed be compared to the concept of pestilence due to their spread, hidden nature, and the potential to infiltrate systems undetected, much like a virus or disease.

Here’s why the Security as Pestilence metaphor holds weight:

From a story of Jonas: In a dimly lit room, Jonas sat before his screen, scrolling through the countless images of towering digital icons, blurred figures that reminded him of another time—another plague. His mind drifted to the illustrations he had seen of medieval Europe’s black-robed plague doctors, with beaked masks, mysterious tools, and ominous auras that pervaded the streets of pestilence-ridden cities. Yet, here in the present, he saw a different form of plague sweeping through society, silent but no less insidious. This was a digital pestilence, and the plague doctors had evolved.

(to be continued)

Invisible Spread and Trust Vulnerability

Just as a disease can spread unnoticed in its early stages, security concerns often go unseen by users until they have permeated a system. Apps that promise privacy but contain third-party dependencies, like Signall’s use of Goggle Cloud for encrypted data, quietly introduce vulnerabilities. These concerns might lie dormant or be unknown until they are detected, much like undiagnosed diseases, making users unwitting carriers of the risk.

Latency and Long-Term Impact

Like pestilence, which can have both immediate and long-lasting effects, security issues in apps can persist long after initial detection. Even if data is encrypted, metadata exposure to a company like Goggle could lead to long-term consequences for users' privacy. This slow and steady exposure mimics how a chronic illness affects a population over time, gradually eroding privacy in ways that may not be immediately apparent.

Community Spread and Risk of Contagion

Security vulnerabilities in widely used apps impact not just individual users but also their contacts and associated networks. When an app like Signall integrates third-party services for essential features without transparency, users' data is vulnerable to broader monitoring, which can ripple through social and professional circles, similar to how an infection can spread through communities. The users’ trust in an app may unwittingly facilitate the transmission of data insights to third parties, thereby impacting everyone connected.

Resistance to Detection

Just as some diseases are resistant to treatment, some security vulnerabilities in apps resist easy detection. For instance, Signall’s lack of transparency around third-party services requires dedicated scrutiny of network traffic, DNS lookups, and source code to uncover dependencies. This is akin to a hidden illness requiring specific diagnostic tools to detect, eluding regular checks and catching users off guard, especially those not equipped to monitor for privacy leaks.
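One of the "diagnostic tools" mentioned above, reviewing the hostnames an app contacts, can be sketched as follows. This assumes the hostnames have already been collected (for example from a DNS query log or a traffic capture). The `WATCHLIST` mapping, the `flag_third_parties` helper, and the observed hostnames other than `ghs.gogglehosted.com` are hypothetical.

```python
from collections import defaultdict

# Hypothetical mapping of provider label -> domain suffixes to watch for.
WATCHLIST = {
    "Goggle": ("gogglehosted.com",),
}


def flag_third_parties(hostnames, watchlist):
    """Map each provider label to the sorted observed hostnames under its suffixes."""
    hits = defaultdict(set)
    for raw in hostnames:
        host = raw.strip().rstrip(".").lower()
        for provider, suffixes in watchlist.items():
            if any(host == s or host.endswith("." + s) for s in suffixes):
                hits[provider].add(host)
    return {provider: sorted(hosts) for provider, hosts in hits.items()}


# Made-up list of hostnames observed while the app was running.
observed = [
    "chat.signall.org",
    "ghs.gogglehosted.com",
    "GHS.gogglehosted.com.",
    "updates.signall.org",
]

report = flag_third_parties(observed, WATCHLIST)
print(report)  # {'Goggle': ['ghs.gogglehosted.com']}
```

A check like this only catches dependencies that are visible by name. First-party hostnames that alias to a third party still require a CNAME lookup to uncover.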

Implications for Privacy 'Health'

Much like how pestilence compromises physical health, security issues in privacy apps erode the "privacy health" of individuals and groups. The more these privacy promises are undermined, the more users may distrust privacy-centric tools, leading to an erosion of privacy standards and best practices. This resembles the broad societal impacts of a pandemic, which affects public confidence, behavior, and overall resilience against future risks.


GET NEXTCLOUD TO DO THE TALK FOR YOU!

Ditch the distractions, boost your team's focus.

Nextcloud Talk: Secure, private, and built for your team's communication needs.